Keras ConvNet

import os, shutil

original_dataset_dir = 'C:/train'

# Directory where the smaller dataset will be stored

base_dir = 'C:/cats_and_dogs_small'

if os.path.exists(base_dir):  # Delete the directory so the script can be re-run.

    shutil.rmtree(base_dir)

os.mkdir(base_dir)

# Directories for the training, validation, and test splits

train_dir = os.path.join(base_dir, 'train')

os.mkdir(train_dir)

validation_dir = os.path.join(base_dir, 'validation')

os.mkdir(validation_dir)

test_dir = os.path.join(base_dir, 'test')

os.mkdir(test_dir)

# Directory of cat pictures for training

train_cats_dir = os.path.join(train_dir, 'cats')

os.mkdir(train_cats_dir)

# Directory of dog pictures for training

train_dogs_dir = os.path.join(train_dir, 'dogs')

os.mkdir(train_dogs_dir)

# Directory of cat pictures for validation

validation_cats_dir = os.path.join(validation_dir, 'cats')

os.mkdir(validation_cats_dir)

# Directory of dog pictures for validation

validation_dogs_dir = os.path.join(validation_dir, 'dogs')

os.mkdir(validation_dogs_dir)

# Directory of cat pictures for testing

test_cats_dir = os.path.join(test_dir, 'cats')

os.mkdir(test_cats_dir)

# Directory of dog pictures for testing

test_dogs_dir = os.path.join(test_dir, 'dogs')

os.mkdir(test_dogs_dir)

# Copy the first 1,000 cat images to train_cats_dir

fnames = ['cat.{}.jpg'.format(i) for i in range(1000)]

for fname in fnames:

    src = os.path.join(original_dataset_dir, fname)

    dst = os.path.join(train_cats_dir, fname)

    shutil.copyfile(src, dst)

# Copy the next 500 cat images to validation_cats_dir

fnames = ['cat.{}.jpg'.format(i) for i in range(1000, 1500)]

for fname in fnames:

    src = os.path.join(original_dataset_dir, fname)

    dst = os.path.join(validation_cats_dir, fname)

    shutil.copyfile(src, dst)

# Copy the next 500 cat images to test_cats_dir

fnames = ['cat.{}.jpg'.format(i) for i in range(1500, 2000)]

for fname in fnames:

    src = os.path.join(original_dataset_dir, fname)

    dst = os.path.join(test_cats_dir, fname)

    shutil.copyfile(src, dst)

# Copy the first 1,000 dog images to train_dogs_dir

fnames = ['dog.{}.jpg'.format(i) for i in range(1000)]

for fname in fnames:

    src = os.path.join(original_dataset_dir, fname)

    dst = os.path.join(train_dogs_dir, fname)

    shutil.copyfile(src, dst)

# Copy the next 500 dog images to validation_dogs_dir

fnames = ['dog.{}.jpg'.format(i) for i in range(1000, 1500)]

for fname in fnames:

    src = os.path.join(original_dataset_dir, fname)

    dst = os.path.join(validation_dogs_dir, fname)

    shutil.copyfile(src, dst)

# Copy the next 500 dog images to test_dogs_dir

fnames = ['dog.{}.jpg'.format(i) for i in range(1500, 2000)]

for fname in fnames:

    src = os.path.join(original_dataset_dir, fname)

    dst = os.path.join(test_dogs_dir, fname)

    shutil.copyfile(src, dst)
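A quick sanity check can confirm that the copy produced the intended split (1,000 training, 500 validation, and 500 test images per class). The sketch below is one possible way to count the files. `count_images` is a hypothetical helper that is not part of the original notebook, and it is demonstrated on a throwaway temporary tree so that it runs without the dataset.

```python
import os
import tempfile


def count_images(base_dir):
    """Return {(split, class_name): file_count} for a split/class directory tree."""
    counts = {}
    for split in sorted(os.listdir(base_dir)):
        split_dir = os.path.join(base_dir, split)
        if not os.path.isdir(split_dir):
            continue
        for class_name in sorted(os.listdir(split_dir)):
            class_dir = os.path.join(split_dir, class_name)
            if os.path.isdir(class_dir):
                counts[(split, class_name)] = len(os.listdir(class_dir))
    return counts


# Demonstration on a small temporary tree (assumed layout: split/class/files).
demo_dir = tempfile.mkdtemp()
for split, n_files in [('train', 3), ('validation', 2)]:
    for class_name in ['cats', 'dogs']:
        class_dir = os.path.join(demo_dir, split, class_name)
        os.makedirs(class_dir)
        for i in range(n_files):
            open(os.path.join(class_dir, '{}.jpg'.format(i)), 'w').close()

demo_counts = count_images(demo_dir)
print(demo_counts)
```

Calling `count_images(base_dir)` on the directory built above should report 1,000 files for each training class and 500 for each validation and test class.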

Building the network

from keras import layers, models

model=models.Sequential()

model.add(layers.Conv2D(32, (3,3), activation = 'relu', input_shape=(150,150,3)))

model.add(layers.MaxPooling2D(2,2))

model.add(layers.Conv2D(64, (3,3), activation = 'relu'))

model.add(layers.MaxPooling2D(2,2))

model.add(layers.Conv2D(128, (3,3), activation = 'relu'))

model.add(layers.MaxPooling2D(2,2))

model.add(layers.Conv2D(128, (3,3), activation = 'relu'))

model.add(layers.MaxPooling2D(2,2))

model.add(layers.Flatten())

model.add(layers.Dense(512, activation='relu'))

model.add(layers.Dense(1, activation = 'sigmoid'))

model.summary()

Model: "sequential_3"
_________________________________________________________________
Layer (type)                 Output Shape              Param #
=================================================================
conv2d_8 (Conv2D)            (None, 148, 148, 32)      896
_________________________________________________________________
max_pooling2d_8 (MaxPooling2 (None, 74, 74, 32)        0
_________________________________________________________________
conv2d_9 (Conv2D)            (None, 72, 72, 64)        18496
_________________________________________________________________
max_pooling2d_9 (MaxPooling2 (None, 36, 36, 64)        0
_________________________________________________________________
conv2d_10 (Conv2D)           (None, 34, 34, 128)       73856
_________________________________________________________________
max_pooling2d_10 (MaxPooling (None, 17, 17, 128)       0
_________________________________________________________________
conv2d_11 (Conv2D)           (None, 15, 15, 128)       147584
_________________________________________________________________
max_pooling2d_11 (MaxPooling (None, 7, 7, 128)         0
_________________________________________________________________
flatten_3 (Flatten)          (None, 6272)              0
_________________________________________________________________
dense_5 (Dense)              (None, 512)               3211776
_________________________________________________________________
dense_6 (Dense)              (None, 1)                 513
=================================================================
Total params: 3,453,121
Trainable params: 3,453,121
Non-trainable params: 0
_________________________________________________________________
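The shapes and parameter counts in the summary can be reproduced by hand. The sketch below assumes what the model definition uses: 3x3 convolutions without padding and with stride 1, followed by 2x2 max pooling. The helper names are illustrative and not part of Keras.

```python
def conv_output_size(size, kernel=3):
    """Spatial size after an unpadded ('valid') convolution with stride 1."""
    return size - kernel + 1


def pool_output_size(size, pool=2):
    """Spatial size after max pooling whose stride equals the pool size."""
    return size // pool


def conv_params(in_channels, filters, kernel=3):
    """kernel * kernel * in_channels weights plus one bias, per filter."""
    return (kernel * kernel * in_channels + 1) * filters


def dense_params(inputs, units):
    """One weight per input plus one bias, per unit."""
    return (inputs + 1) * units


size, channels = 150, 3
total = 0
for filters in [32, 64, 128, 128]:
    total += conv_params(channels, filters)
    size = pool_output_size(conv_output_size(size))
    channels = filters

flat = size * size * channels
total += dense_params(flat, 512) + dense_params(512, 1)
print(size, flat, total)  # 7 6272 3453121
```

Most of the parameters (3,211,776 of 3,453,121) sit in the first Dense layer, because it connects all 6,272 flattened features to 512 units.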

Configuring the model for training

from keras import optimizers

model.compile(loss='binary_crossentropy',

              optimizer=optimizers.RMSprop(lr=1e-4),

              metrics=['acc'])

Data preprocessing

from keras.preprocessing.image import ImageDataGenerator

train_datagen = ImageDataGenerator(rescale=1./255)

test_datagen = ImageDataGenerator(rescale=1./255)

# Generators that turn image files into batches of preprocessed tensors

train_generator = train_datagen.flow_from_directory(

    train_dir,

    target_size=(150, 150),

    batch_size=20,

    class_mode='binary')

validation_generator = test_datagen.flow_from_directory(

    validation_dir,

    target_size=(150, 150),

    batch_size=20,

    class_mode='binary')

Found 2000 images belonging to 2 classes.

Found 1000 images belonging to 2 classes.
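Two things happen inside these generators that are easy to overlook: `flow_from_directory` derives the labels from the subdirectory names in alphanumeric order, and `rescale=1./255` multiplies every pixel value by that factor. The plain-Python sketch below mimics both steps for illustration only. It does not call Keras, and `rescale_pixels` is a hypothetical helper.

```python
# Class subdirectories as they might be listed on disk, in arbitrary order.
class_dirs = ['dogs', 'cats']

# Labels are assigned by sorted directory name, so cats -> 0 and dogs -> 1.
class_indices = {name: index for index, name in enumerate(sorted(class_dirs))}
print(class_indices)  # {'cats': 0, 'dogs': 1}


def rescale_pixels(pixels, factor=1. / 255):
    """Scale raw 0-255 pixel values into the [0, 1] range."""
    return [p * factor for p in pixels]


scaled = rescale_pixels([0, 128, 255])
print(scaled)
```

With `class_mode='binary'` and a sigmoid output, a prediction close to 1 therefore means "dog" under this mapping.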

for data_batch, labels_batch in train_generator:

    print('Batch data shape: ', data_batch.shape)

    print('Batch labels shape: ', labels_batch.shape)

    break

Batch data shape: (20, 150, 150, 3)

Batch labels shape: (20,)
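Because the generators yield batches indefinitely, the training call has to be told how many batches make up one epoch. With 2,000 training images, 1,000 validation images, and batches of 20, the arithmetic is sketched below. `steps_to_cover` is a hypothetical helper name.

```python
import math


def steps_to_cover(num_samples, batch_size):
    """Number of batches needed to see every sample once."""
    return math.ceil(num_samples / batch_size)


train_steps = steps_to_cover(2000, 20)
val_steps = steps_to_cover(1000, 20)
print(train_steps, val_steps)  # 100 50
```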

Training the model

history = model.fit_generator(

    train_generator,

    steps_per_epoch=100,  # 2,000 training images / batches of 20 = 100 steps

    epochs=30,

    validation_data=validation_generator,

    validation_steps=50)  # the validation generator yields batches of 20, so 50 steps cover all 1,000 images

Epoch 1/30

100/100 [==============================] - 54s 542ms/step - loss: 0.6493 - acc: 0.6235 - val_loss: 0.6758 - val_acc: 0.6100

Epoch 2/30

100/100 [==============================] - 59s 587ms/step - loss: 0.5981 - acc: 0.6860 - val_loss: 0.7680 - val_acc: 0.6440

Epoch 3/30

100/100 [==============================] - 60s 604ms/step - loss: 0.5637 - acc: 0.7095 - val_loss: 0.6008 - val_acc: 0.6760

Epoch 4/30

100/100 [==============================] - 71s 710ms/step - loss: 0.5370 - acc: 0.7245 - val_loss: 0.8597 - val_acc: 0.5580

Epoch 5/30

100/100 [==============================] - 66s 656ms/step - loss: 0.5105 - acc: 0.7440 - val_loss: 0.7412 - val_acc: 0.6850

Epoch 6/30

100/100 [==============================] - 57s 569ms/step - loss: 0.4803 - acc: 0.7750 - val_loss: 0.4612 - val_acc: 0.6990

Epoch 7/30

100/100 [==============================] - 57s 570ms/step - loss: 0.4633 - acc: 0.7765 - val_loss: 0.6596 - val_acc: 0.7060

Epoch 8/30

100/100 [==============================] - 57s 574ms/step - loss: 0.4347 - acc: 0.7955 - val_loss: 0.3556 - val_acc: 0.6900

Epoch 9/30

100/100 [==============================] - 58s 577ms/step - loss: 0.4131 - acc: 0.8150 - val_loss: 0.6390 - val_acc: 0.7260

Epoch 10/30

100/100 [==============================] - 57s 571ms/step - loss: 0.3899 - acc: 0.8265 - val_loss: 0.3724 - val_acc: 0.7170

Epoch 11/30

100/100 [==============================] - 57s 571ms/step - loss: 0.3711 - acc: 0.8310 - val_loss: 0.5413 - val_acc: 0.7270

Epoch 12/30

100/100 [==============================] - 57s 571ms/step - loss: 0.3530 - acc: 0.8465 - val_loss: 0.6112 - val_acc: 0.7320

Epoch 13/30

100/100 [==============================] - 57s 570ms/step - loss: 0.3255 - acc: 0.8610 - val_loss: 0.3573 - val_acc: 0.6850

Epoch 14/30

100/100 [==============================] - 58s 577ms/step - loss: 0.3023 - acc: 0.8795 - val_loss: 0.5398 - val_acc: 0.7220

Epoch 15/30

100/100 [==============================] - 57s 569ms/step - loss: 0.2851 - acc: 0.8855 - val_loss: 0.3826 - val_acc: 0.7430

Epoch 16/30

100/100 [==============================] - 57s 574ms/step - loss: 0.2550 - acc: 0.8990 - val_loss: 0.4806 - val_acc: 0.7360

Epoch 17/30

100/100 [==============================] - 57s 568ms/step - loss: 0.2451 - acc: 0.9015 - val_loss: 0.8120 - val_acc: 0.7270

Epoch 18/30

100/100 [==============================] - 57s 570ms/step - loss: 0.2185 - acc: 0.9200 - val_loss: 0.8893 - val_acc: 0.7420

Epoch 19/30

100/100 [==============================] - 58s 581ms/step - loss: 0.1961 - acc: 0.9275 - val_loss: 0.5893 - val_acc: 0.7460

Epoch 20/30

100/100 [==============================] - 57s 571ms/step - loss: 0.1765 - acc: 0.9385 - val_loss: 0.3419 - val_acc: 0.7400

Epoch 21/30

100/100 [==============================] - 57s 570ms/step - loss: 0.1609 - acc: 0.9440 - val_loss: 1.1847 - val_acc: 0.7440

Epoch 22/30

100/100 [==============================] - 57s 568ms/step - loss: 0.1411 - acc: 0.9560 - val_loss: 0.5061 - val_acc: 0.7500

Epoch 23/30

100/100 [==============================] - 57s 571ms/step - loss: 0.1259 - acc: 0.9580 - val_loss: 0.7856 - val_acc: 0.7430

Epoch 24/30

100/100 [==============================] - 57s 574ms/step - loss: 0.1075 - acc: 0.9670 - val_loss: 1.5615 - val_acc: 0.7460

Epoch 25/30

100/100 [==============================] - 57s 569ms/step - loss: 0.0930 - acc: 0.9745 - val_loss: 1.0389 - val_acc: 0.7530

Epoch 26/30

100/100 [==============================] - 57s 569ms/step - loss: 0.0897 - acc: 0.9725 - val_loss: 0.2108 - val_acc: 0.7270

Epoch 27/30

100/100 [==============================] - 57s 570ms/step - loss: 0.0732 - acc: 0.9765 - val_loss: 0.4359 - val_acc: 0.7350

Epoch 28/30

100/100 [==============================] - 57s 569ms/step - loss: 0.0589 - acc: 0.9860 - val_loss: 0.4736 - val_acc: 0.7590

Epoch 29/30

100/100 [==============================] - 57s 569ms/step - loss: 0.0513 - acc: 0.9860 - val_loss: 0.6719 - val_acc: 0.7570

Epoch 30/30

100/100 [==============================] - 57s 565ms/step - loss: 0.0414 - acc: 0.9905 - val_loss: 0.8831 - val_acc: 0.7390

model.save('cats_and_dogs_small_1.h5')

import matplotlib.pyplot as plt

acc = history.history['acc']

val_acc = history.history['val_acc']

loss = history.history['loss']

val_loss = history.history['val_loss']

epochs = range(1, len(acc)+1)

plt.plot(epochs, acc, 'bo', label='Training acc')  # x, y, line style, label

plt.plot(epochs, val_acc, 'b', label='Validation acc')

plt.title('Training and validation accuracy')

plt.legend()

plt.figure()

plt.plot(epochs, loss, 'bo', label='Training loss')

plt.plot(epochs, val_loss, 'b', label='Validation loss')

plt.title('Training and validation loss')

plt.legend()

plt.show()

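From around epoch 5, validation accuracy plateaus at roughly 70 to 75% while training accuracy keeps climbing toward 99%, which indicates overfitting.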
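The training log above makes the widening gap between training and validation accuracy concrete. The sketch below computes that gap for a handful of epochs, using values copied from the log. `accuracy_gap` is a hypothetical helper, and with a real run the same lists would come from `history.history`.

```python
def accuracy_gap(train_acc, val_acc):
    """Per-epoch difference between training and validation accuracy."""
    return [round(t - v, 4) for t, v in zip(train_acc, val_acc)]


# Values for epochs 1, 5, 10, 20, and 30, taken from the log above.
epochs_sampled = [1, 5, 10, 20, 30]
train_acc = [0.6235, 0.7440, 0.8265, 0.9385, 0.9905]
val_acc = [0.6100, 0.6850, 0.7170, 0.7400, 0.7390]

gaps = accuracy_gap(train_acc, val_acc)
for epoch, gap in zip(epochs_sampled, gaps):
    print('epoch {:2d}: gap = {:.4f}'.format(epoch, gap))
```

The gap grows from about 1 percentage point at epoch 1 to about 25 at epoch 30, which is the pattern that data augmentation and dropout are meant to counter.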


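Data augmentation

Data augmentation increases the number of training samples by applying several random transformations to the existing images.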

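datagen = ImageDataGenerator(

    rotation_range=20,  # range of random rotations, in degrees

    width_shift_range=0.1,  # horizontal shift, as a fraction of total width

    height_shift_range=0.1,  # vertical shift, as a fraction of total height

    shear_range=0.1,  # shear angle

    zoom_range=0.1,  # zoom range

    horizontal_flip=True,  # randomly flip images horizontally

    fill_mode='nearest')  # strategy for filling pixels newly created by a rotation or shift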

from keras.preprocessing import image  # image preprocessing utilities

fnames = sorted([os.path.join(train_cats_dir, fname) for

                 fname in os.listdir(train_cats_dir)])

img_path = fnames[892]

img = image.load_img(img_path, target_size=(150, 150))  # read the image and resize it

x = image.img_to_array(img)  # convert to a NumPy array of shape (150, 150, 3)

x = x.reshape((1,) + x.shape)  # reshape to (1, 150, 150, 3)

i = 0

# datagen.flow() yields randomly transformed batches forever, so the loop must break explicitly

for batch in datagen.flow(x, batch_size=1):

    plt.figure(i)

    imgplot = plt.imshow(image.array_to_img(batch[0]))

    i += 1

    if i % 4 == 0:

        break

plt.show()

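Even with data augmentation, the samples remain highly correlated with one another because they all derive from a small number of original images.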
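A toy example shows why augmented samples stay correlated: a flipped or shifted image contains almost exactly the same pixel values as its source. The sketch below applies a horizontal flip and a one-pixel shift with nearest-edge filling to a tiny 2x3 "image". It is a simplified illustration and not how Keras implements these transforms, and both function names are hypothetical.

```python
def horizontal_flip(img):
    """Mirror each row left to right."""
    return [row[::-1] for row in img]


def shift_right_nearest(img, pixels):
    """Shift rows right by `pixels`, filling the gap with the nearest edge value."""
    shifted = []
    for row in img:
        n = min(pixels, len(row))
        shifted.append([row[0]] * n + row[:len(row) - n])
    return shifted


img = [[1, 2, 3],
       [4, 5, 6]]

flipped = horizontal_flip(img)
shifted = shift_right_nearest(img, 1)
print(flipped)  # [[3, 2, 1], [6, 5, 4]]
print(shifted)  # [[1, 1, 2], [4, 4, 5]]
```

No new information enters the dataset: the transforms only rearrange or repeat existing pixels, so augmentation alone may not be enough to remove overfitting.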

Adding a Dropout layer to reduce overfitting

model = models.Sequential()

model.add(layers.Conv2D(32, (3,3), activation='relu',

                        input_shape=(150,150,3)))

model.add(layers.MaxPooling2D(2,2))

model.add(layers.Conv2D(64, (3,3), activation='relu'))

model.add(layers.MaxPooling2D(2,2))

model.add(layers.Conv2D(128, (3,3), activation='relu'))

model.add(layers.MaxPooling2D(2,2))

model.add(layers.Conv2D(128, (3,3), activation='relu'))

model.add(layers.MaxPooling2D(2,2))

model.add(layers.Flatten())

model.add(layers.Dropout(0.5))

model.add(layers.Dense(512, activation='relu'))

model.add(layers.Dense(1, activation='sigmoid'))

model.compile(loss='binary_crossentropy',

              optimizer=optimizers.RMSprop(lr=1e-4),

              metrics=['acc'])
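The `Dropout(0.5)` layer in the model above randomly zeroes half of the flattened features during training and scales the remaining ones up so that the expected sum stays the same. At inference time it passes values through unchanged. The sketch below illustrates this "inverted dropout" behavior in plain Python. It is a minimal illustration rather than the Keras implementation, and the function signature is an assumption made for this example.

```python
import random


def dropout(values, rate, training=True, rng=None):
    """Zero each value with probability `rate`; scale survivors by 1 / (1 - rate)."""
    if not 0 <= rate < 1:
        raise ValueError('rate must be in [0, 1)')
    if not training or rate == 0:
        return list(values)
    rng = rng or random.Random()
    keep = 1.0 - rate
    return [v / keep if rng.random() >= rate else 0.0 for v in values]


activations = [1.0] * 10
dropped = dropout(activations, rate=0.5, rng=random.Random(0))
unchanged = dropout(activations, rate=0.5, training=False)
print(dropped)    # every entry is either 0.0 or 2.0
print(unchanged)  # identical to the input
```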

train_datagen = ImageDataGenerator(

    rescale=1./255,

    rotation_range=40,

    width_shift_range=0.2,

    height_shift_range=0.2,

    shear_range=0.2,

    zoom_range=0.2,

    horizontal_flip=True)

# Validation data must not be augmented, only rescaled

test_datagen = ImageDataGenerator(rescale=1./255)

train_generator = train_datagen.flow_from_directory(

    train_dir,

    target_size=(150, 150),

    batch_size=32,

    class_mode='binary')

validation_generator = test_datagen.flow_from_directory(

    validation_dir,

    target_size=(150, 150),

    batch_size=32,

    class_mode='binary')

# Note: with batches of 32, 100 training steps draw 3,200 samples per epoch from the 2,000 images,

# and 50 validation steps draw 1,600 samples from the 1,000 validation images

history = model.fit_generator(

    train_generator,

    steps_per_epoch=100,

    epochs=100,

    validation_data=validation_generator,

    validation_steps=50)

Found 2000 images belonging to 2 classes.

Found 1000 images belonging to 2 classes.

Epoch 1/100

100/100 [==============================] - 92s 925ms/step - loss: 0.6887 - acc: 0.5283 - val_loss: 0.7003 - val_acc: 0.5596

Epoch 2/100

100/100 [==============================] - 92s 925ms/step - loss: 0.6626 - acc: 0.5830 - val_loss: 0.6613 - val_acc: 0.5760

Epoch 3/100

100/100 [==============================] - 93s 925ms/step - loss: 0.6517 - acc: 0.5997 - val_loss: 0.6276 - val_acc: 0.6301

Epoch 4/100

100/100 [==============================] - 94s 940ms/step - loss: 0.6286 - acc: 0.6451 - val_loss: 0.6294 - val_acc: 0.6289

Epoch 5/100

100/100 [==============================] - 92s 922ms/step - loss: 0.6138 - acc: 0.6585 - val_loss: 0.5954 - val_acc: 0.6567

Epoch 6/100

100/100 [==============================] - 93s 932ms/step - loss: 0.6028 - acc: 0.6660 - val_loss: 0.4447 - val_acc: 0.6701

Epoch 7/100

100/100 [==============================] - 93s 931ms/step - loss: 0.5955 - acc: 0.6812 - val_loss: 0.6383 - val_acc: 0.6859

Epoch 8/100

100/100 [==============================] - 92s 918ms/step - loss: 0.5922 - acc: 0.6771 - val_loss: 0.7146 - val_acc: 0.6772

Epoch 9/100

100/100 [==============================] - 92s 917ms/step - loss: 0.5721 - acc: 0.6932 - val_loss: 0.7393 - val_acc: 0.6134

Epoch 10/100

100/100 [==============================] - 92s 916ms/step - loss: 0.5715 - acc: 0.7063 - val_loss: 0.5376 - val_acc: 0.6948

Epoch 11/100

100/100 [==============================] - 93s 928ms/step - loss: 0.5663 - acc: 0.7030 - val_loss: 0.6372 - val_acc: 0.7249

Epoch 12/100

100/100 [==============================] - 92s 922ms/step - loss: 0.5654 - acc: 0.6932 - val_loss: 0.5627 - val_acc: 0.6459

Epoch 13/100

100/100 [==============================] - 91s 913ms/step - loss: 0.5576 - acc: 0.7105 - val_loss: 0.4204 - val_acc: 0.7178

Epoch 14/100

100/100 [==============================] - 113s 1s/step - loss: 0.5539 - acc: 0.7162 - val_loss: 0.4409 - val_acc: 0.7487

Epoch 15/100

100/100 [==============================] - 137s 1s/step - loss: 0.5513 - acc: 0.7114 - val_loss: 0.5906 - val_acc: 0.7049

Epoch 16/100

100/100 [==============================] - 139s 1s/step - loss: 0.5382 - acc: 0.7279 - val_loss: 0.4660 - val_acc: 0.7481

Epoch 17/100

100/100 [==============================] - 137s 1s/step - loss: 0.5451 - acc: 0.7220 - val_loss: 0.4494 - val_acc: 0.7341

Epoch 18/100

100/100 [==============================] - 141s 1s/step - loss: 0.5201 - acc: 0.7424 - val_loss: 0.5684 - val_acc: 0.7500

Epoch 19/100

100/100 [==============================] - 139s 1s/step - loss: 0.5193 - acc: 0.7409 - val_loss: 0.4637 - val_acc: 0.7627

Epoch 20/100

100/100 [==============================] - 137s 1s/step - loss: 0.5231 - acc: 0.7364 - val_loss: 0.3562 - val_acc: 0.7332

Epoch 21/100

100/100 [==============================] - 31673s 317s/step - loss: 0.5229 - acc: 0.7406 - val_loss: 0.4830 - val_acc: 0.7678

Epoch 22/100

100/100 [==============================] - 111s 1s/step - loss: 0.5097 - acc: 0.7427 - val_loss: 0.5673 - val_acc: 0.7732

Epoch 23/100

100/100 [==============================] - 117s 1s/step - loss: 0.5033 - acc: 0.7657 - val_loss: 0.4790 - val_acc: 0.7354

Epoch 24/100

100/100 [==============================] - 113s 1s/step - loss: 0.4987 - acc: 0.7624 - val_loss: 0.4347 - val_acc: 0.7629

Epoch 25/100

100/100 [==============================] - 109s 1s/step - loss: 0.5046 - acc: 0.7503 - val_loss: 0.4750 - val_acc: 0.7384

Epoch 26/100

100/100 [==============================] - 109s 1s/step - loss: 0.5043 - acc: 0.7547 - val_loss: 0.5126 - val_acc: 0.7538

Epoch 27/100

100/100 [==============================] - 109s 1s/step - loss: 0.4973 - acc: 0.7622 - val_loss: 0.3817 - val_acc: 0.7713

Epoch 28/100

100/100 [==============================] - 108s 1s/step - loss: 0.4907 - acc: 0.7652 - val_loss: 0.4684 - val_acc: 0.7786

Epoch 29/100

100/100 [==============================] - 108s 1s/step - loss: 0.4908 - acc: 0.7667 - val_loss: 0.3464 - val_acc: 0.7281

Epoch 30/100

100/100 [==============================] - 111s 1s/step - loss: 0.4846 - acc: 0.7660 - val_loss: 0.3983 - val_acc: 0.7582

Epoch 31/100

100/100 [==============================] - 108s 1s/step - loss: 0.4824 - acc: 0.7654 - val_loss: 0.3176 - val_acc: 0.7841

Epoch 32/100

100/100 [==============================] - 106s 1s/step - loss: 0.4890 - acc: 0.7614 - val_loss: 0.4906 - val_acc: 0.7094

Epoch 33/100

100/100 [==============================] - 110s 1s/step - loss: 0.4720 - acc: 0.7660 - val_loss: 0.5354 - val_acc: 0.7931

Epoch 34/100

100/100 [==============================] - 107s 1s/step - loss: 0.4662 - acc: 0.7721 - val_loss: 0.3767 - val_acc: 0.7957

Epoch 35/100

100/100 [==============================] - 112s 1s/step - loss: 0.4815 - acc: 0.7661 - val_loss: 0.5181 - val_acc: 0.8001

Epoch 36/100

100/100 [==============================] - 127s 1s/step - loss: 0.4580 - acc: 0.7871 - val_loss: 0.3505 - val_acc: 0.7893

Epoch 37/100

100/100 [==============================] - 114s 1s/step - loss: 0.4707 - acc: 0.7648 - val_loss: 0.3029 - val_acc: 0.7868

Epoch 38/100

100/100 [==============================] - 137s 1s/step - loss: 0.4645 - acc: 0.7833 - val_loss: 0.4289 - val_acc: 0.7706

Epoch 39/100

100/100 [==============================] - 138s 1s/step - loss: 0.4624 - acc: 0.7839 - val_loss: 0.6486 - val_acc: 0.7595

Epoch 40/100

100/100 [==============================] - 124s 1s/step - loss: 0.4413 - acc: 0.7905 - val_loss: 0.5328 - val_acc: 0.7719

Epoch 41/100

100/100 [==============================] - 127s 1s/step - loss: 0.4578 - acc: 0.7863 - val_loss: 0.7052 - val_acc: 0.7700

Epoch 42/100

100/100 [==============================] - 130s 1s/step - loss: 0.4422 - acc: 0.7926 - val_loss: 0.5355 - val_acc: 0.7957

Epoch 43/100

100/100 [==============================] - 127s 1s/step - loss: 0.4352 - acc: 0.8018 - val_loss: 0.5226 - val_acc: 0.7764

Epoch 44/100

100/100 [==============================] - 117s 1s/step - loss: 0.4456 - acc: 0.7937 - val_loss: 0.5422 - val_acc: 0.7862

Epoch 45/100

100/100 [==============================] - 140s 1s/step - loss: 0.4314 - acc: 0.8002 - val_loss: 0.2976 - val_acc: 0.7906

Epoch 46/100

100/100 [==============================] - 138s 1s/step - loss: 0.4355 - acc: 0.7964 - val_loss: 0.3651 - val_acc: 0.7951

Epoch 47/100

100/100 [==============================] - 129s 1s/step - loss: 0.4251 - acc: 0.8033 - val_loss: 0.3866 - val_acc: 0.7545

Epoch 48/100

100/100 [==============================] - 122s 1s/step - loss: 0.4355 - acc: 0.7993 - val_loss: 0.3938 - val_acc: 0.7957

Epoch 49/100

100/100 [==============================] - 126s 1s/step - loss: 0.4212 - acc: 0.8103 - val_loss: 0.4630 - val_acc: 0.7836

Epoch 50/100

100/100 [==============================] - 136s 1s/step - loss: 0.4326 - acc: 0.7992 - val_loss: 0.2479 - val_acc: 0.8177

Epoch 51/100

100/100 [==============================] - 131s 1s/step - loss: 0.4219 - acc: 0.8043 - val_loss: 0.4381 - val_acc: 0.7874

Epoch 52/100

100/100 [==============================] - 134s 1s/step - loss: 0.4237 - acc: 0.8052 - val_loss: 0.3356 - val_acc: 0.7500

Epoch 53/100

100/100 [==============================] - 128s 1s/step - loss: 0.4218 - acc: 0.8068 - val_loss: 0.6825 - val_acc: 0.7735

Epoch 54/100

100/100 [==============================] - 132s 1s/step - loss: 0.4140 - acc: 0.8084 - val_loss: 0.7228 - val_acc: 0.8119

Epoch 55/100

100/100 [==============================] - 133s 1s/step - loss: 0.4238 - acc: 0.8009 - val_loss: 0.4984 - val_acc: 0.8160

Epoch 56/100

100/100 [==============================] - 119s 1s/step - loss: 0.4142 - acc: 0.8144 - val_loss: 0.6107 - val_acc: 0.7996

Epoch 57/100

100/100 [==============================] - 116s 1s/step - loss: 0.4121 - acc: 0.8103 - val_loss: 0.4271 - val_acc: 0.8015

Epoch 58/100

100/100 [==============================] - 115s 1s/step - loss: 0.4109 - acc: 0.8122 - val_loss: 0.5391 - val_acc: 0.7995

Epoch 59/100

100/100 [==============================] - 94s 940ms/step - loss: 0.3982 - acc: 0.8153 - val_loss: 0.2988 - val_acc: 0.8170

Epoch 60/100

100/100 [==============================] - 99s 987ms/step - loss: 0.4110 - acc: 0.8125 - val_loss: 0.3002 - val_acc: 0.7824

Epoch 61/100

100/100 [==============================] - 92s 923ms/step - loss: 0.4004 - acc: 0.8200 - val_loss: 0.4444 - val_acc: 0.8215

Epoch 62/100

100/100 [==============================] - 93s 929ms/step - loss: 0.4038 - acc: 0.8150 - val_loss: 0.5618 - val_acc: 0.7874

Epoch 63/100

100/100 [==============================] - 92s 923ms/step - loss: 0.3998 - acc: 0.8213 - val_loss: 0.3710 - val_acc: 0.7899

Epoch 64/100

100/100 [==============================] - 91s 911ms/step - loss: 0.3910 - acc: 0.8172 - val_loss: 0.7399 - val_acc: 0.7996

Epoch 65/100

100/100 [==============================] - 92s 923ms/step - loss: 0.3966 - acc: 0.8207 - val_loss: 0.5853 - val_acc: 0.8115

Epoch 66/100

100/100 [==============================] - 92s 918ms/step - loss: 0.3904 - acc: 0.8191 - val_loss: 0.5106 - val_acc: 0.7784

Epoch 67/100

100/100 [==============================] - 92s 923ms/step - loss: 0.3853 - acc: 0.8239 - val_loss: 0.5523 - val_acc: 0.8071

Epoch 68/100

100/100 [==============================] - 92s 919ms/step - loss: 0.3860 - acc: 0.8286 - val_loss: 0.6057 - val_acc: 0.8177

Epoch 69/100

100/100 [==============================] - 94s 936ms/step - loss: 0.3923 - acc: 0.8239 - val_loss: 0.3772 - val_acc: 0.8147

Epoch 70/100

100/100 [==============================] - 92s 924ms/step - loss: 0.3770 - acc: 0.8320 - val_loss: 0.5353 - val_acc: 0.8222

Epoch 71/100

100/100 [==============================] - 91s 913ms/step - loss: 0.3784 - acc: 0.8318 - val_loss: 0.4160 - val_acc: 0.8261

Epoch 72/100

100/100 [==============================] - 92s 925ms/step - loss: 0.3635 - acc: 0.8351 - val_loss: 0.5472 - val_acc: 0.8235

Epoch 73/100

100/100 [==============================] - 91s 910ms/step - loss: 0.3740 - acc: 0.8299 - val_loss: 0.6288 - val_acc: 0.7738

Epoch 74/100

100/100 [==============================] - 93s 926ms/step - loss: 0.3785 - acc: 0.8311 - val_loss: 0.2810 - val_acc: 0.8147

Epoch 75/100

100/100 [==============================] - 91s 914ms/step - loss: 0.3648 - acc: 0.8339 - val_loss: 0.2999 - val_acc: 0.8293

Epoch 76/100

100/100 [==============================] - 91s 905ms/step - loss: 0.3730 - acc: 0.8349 - val_loss: 0.4934 - val_acc: 0.7881

Epoch 77/100

100/100 [==============================] - 92s 923ms/step - loss: 0.3633 - acc: 0.8455 - val_loss: 0.4755 - val_acc: 0.8086

Epoch 78/100

100/100 [==============================] - 91s 908ms/step - loss: 0.3749 - acc: 0.8302 - val_loss: 0.5254 - val_acc: 0.7443

Epoch 79/100

100/100 [==============================] - 92s 922ms/step - loss: 0.3677 - acc: 0.8361 - val_loss: 0.6599 - val_acc: 0.7661

Epoch 80/100

100/100 [==============================] - 90s 903ms/step - loss: 0.3610 - acc: 0.8409 - val_loss: 0.4462 - val_acc: 0.8119

Epoch 81/100

100/100 [==============================] - 92s 925ms/step - loss: 0.3615 - acc: 0.8384 - val_loss: 0.4108 - val_acc: 0.8084

Epoch 82/100

100/100 [==============================] - 92s 917ms/step - loss: 0.3466 - acc: 0.8489 - val_loss: 0.3377 - val_acc: 0.8028

Epoch 83/100

100/100 [==============================] - 90s 905ms/step - loss: 0.3570 - acc: 0.8415 - val_loss: 0.3121 - val_acc: 0.8261

Epoch 84/100

100/100 [==============================] - 92s 918ms/step - loss: 0.3508 - acc: 0.8464 - val_loss: 0.7422 - val_acc: 0.8048

Epoch 85/100

100/100 [==============================] - 91s 906ms/step - loss: 0.3523 - acc: 0.8456 - val_loss: 0.3180 - val_acc: 0.8223

Epoch 86/100

100/100 [==============================] - 92s 923ms/step - loss: 0.3625 - acc: 0.8359 - val_loss: 0.4887 - val_acc: 0.8099

Epoch 87/100

100/100 [==============================] - 3650s 37s/step - loss: 0.3411 - acc: 0.8492 - val_loss: 0.3528 - val_acc: 0.7633

Epoch 88/100

100/100 [==============================] - 104s 1s/step - loss: 0.3558 - acc: 0.8456 - val_loss: 0.2655 - val_acc: 0.8396

Epoch 89/100

100/100 [==============================] - 100s 1s/step - loss: 0.3324 - acc: 0.8502 - val_loss: 0.3610 - val_acc: 0.8177

Epoch 90/100

100/100 [==============================] - 103s 1s/step - loss: 0.3557 - acc: 0.8403 - val_loss: 0.3775 - val_acc: 0.8255

Epoch 91/100

100/100 [==============================] - 110s 1s/step - loss: 0.3381 - acc: 0.8494 - val_loss: 0.5460 - val_acc: 0.8370

Epoch 92/100

100/100 [==============================] - 107s 1s/step - loss: 0.3438 - acc: 0.8436 - val_loss: 0.4710 - val_acc: 0.8338

Epoch 93/100

100/100 [==============================] - 105s 1s/step - loss: 0.3300 - acc: 0.8549 - val_loss: 0.3508 - val_acc: 0.8370

Epoch 94/100

100/100 [==============================] - 107s 1s/step - loss: 0.3310 - acc: 0.8516 - val_loss: 0.2825 - val_acc: 0.8319

Epoch 95/100

100/100 [==============================] - 105s 1s/step - loss: 0.3412 - acc: 0.8510 - val_loss: 0.3124 - val_acc: 0.8376

Epoch 96/100

100/100 [==============================] - 107s 1s/step - loss: 0.3275 - acc: 0.8551 - val_loss: 0.1276 - val_acc: 0.8286

Epoch 97/100

100/100 [==============================] - 105s 1s/step - loss: 0.3362 - acc: 0.8552 - val_loss: 0.3917 - val_acc: 0.8331

Epoch 98/100

100/100 [==============================] - 107s 1s/step - loss: 0.3110 - acc: 0.8608 - val_loss: 0.4124 - val_acc: 0.8254

Epoch 99/100

100/100 [==============================] - 106s 1s/step - loss: 0.3344 - acc: 0.8496 - val_loss: 0.3453 - val_acc: 0.8115

Epoch 100/100

100/100 [==============================] - 104s 1s/step - loss: 0.3332 - acc: 0.8573 - val_loss: 0.3846 - val_acc: 0.8177

model.save('cats_and_dogs_small_2.h5')

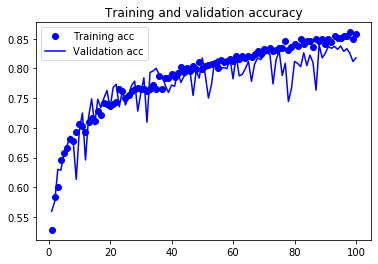

acc=history.history['acc']

val_acc=history.history['val_acc']

loss=history.history['loss']

val_loss=history.history['val_loss']

epochs=range(1,len(acc)+1)

plt.plot(epochs, acc, 'bo', label='Training acc')

plt.plot(epochs, val_acc, 'b', label='Validation acc')

plt.title('Training and validation accuracy')

plt.legend()

plt.figure()  # a figure already exists above, so create a new one for the next plot

plt.plot(epochs, loss, 'bo', label='Training loss')

plt.plot(epochs, val_loss, 'b', label='Validation loss')

plt.title('Training and validation loss')

plt.legend()

<matplotlib.legend.Legend at 0x1de3976fc48>
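The validation curves in these plots are noisy (validation loss swings between roughly 0.13 and 0.74 from one epoch to the next in the log above), which can hide the overall trend. One common remedy is to plot an exponential moving average of each series instead of the raw values. The sketch below shows such a smoothing function. `smooth_curve` and the 0.8 factor are illustrative choices, not part of the original notebook.

```python
def smooth_curve(points, factor=0.8):
    """Exponential moving average: each point blends with the previous smoothed value."""
    smoothed = []
    for point in points:
        if smoothed:
            smoothed.append(smoothed[-1] * factor + point * (1 - factor))
        else:
            smoothed.append(point)
    return smoothed


constant = smooth_curve([1.0, 1.0, 1.0])
step = smooth_curve([0.0, 1.0], factor=0.5)
print(constant)  # [1.0, 1.0, 1.0]
print(step)      # [0.0, 0.5]
```

In the plotting code, this would mean passing `smooth_curve(val_loss)` to `plt.plot` in place of `val_loss`.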
